Intro to AI Alignment

An introduction to the artificial intelligence alignment problem

Artificial intelligence (AI) is already transforming many aspects of our lives, from healthcare and transportation to education and entertainment. AI models are being used in a wide range of applications, such as analyzing medical images and patient data in healthcare, creating personalized learning systems in education, and powering translation services such as Google Translate. However, as AI becomes more advanced and autonomous, one question that arises is: how can we ensure that models behave in ways that are beneficial to humans? AI alignment seeks to answer this.

Imagine an AI model designed to maximize profits. While this might be a desirable goal for a company, it could lead the model to make decisions that are harmful to consumers, employees, or the environment. Or consider a model designed to predict and prevent crime. This seemingly benign goal could lead the system to make decisions that are biased against certain groups of people or that infringe upon individual privacy.

These examples illustrate the importance of ensuring that AI systems are aligned with human values and goals. But how do we do this? What should our goals be, and how do we ensure that the models are optimizing for them? AI alignment seeks to address some of these questions, but we will see that there are many challenges ahead that require a diverse set of perspectives to tackle.

To ground the discussion, we begin with an overview of how current models are trained, which makes it easier to see what problems may arise. We will then define and explore the problem of AI alignment in both existing and future models and discuss some of the solutions that have been proposed. We will also discuss some of the societal and ethical questions raised by advanced artificial intelligence. By the end of this article, you should have a better understanding of the opportunities and challenges presented by advanced artificial intelligence and the role that each of us can play in shaping the future of AI.

This article is an attempt to distill lessons from readings and discussions with colleagues. It was written before GPT-4 was released. The ethical questions around AI only become more important with more capable models. Because these models are relatively easy to access and much faster than humans at processing large amounts of text, we expect them to have a significant impact on jobs that require reading, writing, or coding. It may be a few years before we understand their downstream effects (including those caused by bad actors), so it is prudent to think proactively about societal consequences now.

How are current AI models trained?

Machine learning is a field of artificial intelligence that involves training models to make predictions or decisions based on data. There are many different types of machine learning models, but we will focus on one of them, the neural network, because it is commonly used in many applications. Neural networks are particularly good at extracting complex patterns from large amounts of data.

Understanding the details of how neural networks work is not necessary to understand the problem of AI alignment, but it's important to know that they are flexible models that can learn a wide range of patterns and transformations. For instance:

A neural network can learn to take the pixels of an image and label the object present in the image (is it a cat or a dog?).

A neural network can learn to model enormous amounts of text (think all of Wikipedia). Given the words in a sentence, “I will introduce some machine learning [blank],” a neural network can learn to output a probability distribution over possible next words, such as [concepts, ideas, problems, tasks, …].
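For readers who want to see what “a probability distribution over possible next words” looks like, here is a minimal Python sketch. The candidate words and their raw scores (logits) are invented for illustration; a real language model computes a score for every token in a vocabulary of tens of thousands of entries. The softmax function, a standard way to turn arbitrary scores into probabilities, does the rest.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that are positive and sum to 1."""
    # Subtracting the largest score keeps exp() from overflowing; it does not change the result.
    m = max(scores.values())
    exps = {word: math.exp(s - m) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}

# Hypothetical scores a model might assign to candidate next words for
# "I will introduce some machine learning [blank]".
logits = {"concepts": 2.0, "ideas": 1.5, "problems": 0.5, "tasks": 0.3, "bananas": -3.0}

next_word_probs = softmax(logits)
for word, p in sorted(next_word_probs.items(), key=lambda kv: -kv[1]):
    print(f"{word:10s} {p:.3f}")
```

Higher scores become higher probabilities, and implausible continuations such as “bananas” still receive a small but nonzero share.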

Two paradigms for training

A neural network does not magically have any knowledge of the world; it will make random predictions until we provide a learning signal. This ability to learn is one of the key differentiators from traditional software and has the potential to accelerate technological progress. There are two main paradigms that are commonly used to train models: supervised learning and reinforcement learning.

Note that words like “learning,” “reward,” and “punishment” make it easy to anthropomorphize machine learning models, because human learning (and science fiction) is what we are familiar with. It is important to remember that neural networks learn by following strict mathematical rules.

In supervised learning, the model is provided with a dataset of input and output pairs, and the goal is to learn the underlying relationships in the data in order to generalize to new, unseen examples. For example, suppose we want to train an AI model to classify images of animals as either cats or dogs. We would start by providing the model with a dataset of labeled images of cats and dogs and update the model’s internal parameters in response to the learning signals provided by the labels on the images. Given enough labeled images of cats and dogs, the model would eventually learn a useful classifier that can be applied to unseen images of cats and dogs.
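The supervised recipe can be sketched in a few lines of Python. To keep it small, the sketch makes two simplifying assumptions: each image is reduced to two made-up numeric features instead of thousands of pixels, and the model is logistic regression (effectively a single artificial neuron) rather than a deep neural network. The training loop is the same in spirit: predict, compare the prediction with the label, and nudge the parameters to reduce the error.

```python
import math

def sigmoid(z):
    """Squash any real number into (0, 1) so it can be read as a probability."""
    return 1.0 / (1.0 + math.exp(-z))

def train(examples, epochs=200, lr=0.1):
    """Fit weights w and bias b so that sigmoid(w·x + b) predicts the label (1 = dog, 0 = cat)."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for x, y in examples:
            p = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
            # The label provides the learning signal: err is the gradient of the
            # log-loss with respect to the model's pre-sigmoid output.
            err = p - y
            w[0] -= lr * err * x[0]
            w[1] -= lr * err * x[1]
            b -= lr * err
    return w, b

def predict(w, b, x):
    return 1 if sigmoid(w[0] * x[0] + w[1] * x[1] + b) >= 0.5 else 0

# Hypothetical labeled dataset: two made-up features per image
# (think "snout length" and "body size", rescaled); label 0 = cat, 1 = dog.
training_data = [
    ((-1.0, -0.8), 0), ((-1.2, -1.1), 0), ((-0.7, -1.3), 0), ((-0.9, -0.6), 0),
    ((1.0, 0.9), 1), ((1.3, 1.1), 1), ((0.8, 1.4), 1), ((0.6, 0.7), 1),
]

w, b = train(training_data)

# Apply the learned classifier to examples it has never seen.
unseen = [(-0.8, -1.2), (1.1, 0.9)]
print([predict(w, b, x) for x in unseen])
```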

In reinforcement learning, the model learns through trial and error, receiving rewards or punishments for its actions in a simulated or real-world environment. This is roughly analogous to a dog receiving a treat for good behavior. The practitioner specifies a reward function that determines which actions should be rewarded and which should be punished, and the goal of the model is to learn a policy that maximizes the cumulative reward it receives over time. For example, a model trained using reinforcement learning might be used to control a car, receiving rewards for safely navigating the roads and avoiding accidents.
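A self-driving car is far too complex for a short example, but the trial-and-error loop itself can be sketched. The Python below uses tabular Q-learning, one classic reinforcement learning algorithm (not necessarily what any particular system uses), on a made-up five-square corridor where the only reward comes from reaching the right-hand end. Nobody tells the agent which way to go; it discovers the policy from rewards alone.

```python
import random

N_STATES = 5          # positions 0..4 in a corridor; position 4 is the goal
ACTIONS = (-1, +1)    # move left or move right

def step(state, action):
    """Toy environment: reward 1 for reaching the goal, 0 for every other move."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done

def greedy(q, state, rng):
    """Pick the action with the highest estimated value, breaking ties at random."""
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Trial and error: usually exploit what has been learned, sometimes explore.
            action = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(q, state, rng)
            nxt, reward, done = step(state, action)
            future = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            # Nudge the estimate toward reward + discounted value of what comes next.
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = nxt
    return q

q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}
print(policy)
```

The discount factor `gamma` makes rewards that arrive sooner worth more, which is what pushes the learned policy toward the shortest path.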

The simplicity and flexibility of these frameworks have enabled neural networks to be used in a wide range of applications, including image recognition, machine translation, and chatbot assistants. Much of the allure of neural networks comes from their potential, given a well-specified reward function and lots of trial and error, to discover strategies that humans may not even consider. For example, in games like chess and Go, it is easy to specify the goal: win the game. Equipped with this objective and thousands of hours of playing against itself, starting from random moves, a neural network discovers strategies for winning that surpass those of grandmasters. These strategies might seem alien to humans, as they are not grounded in traditional tactics, but they are highly effective at winning games.

The ability of neural networks to discover novel solutions can be a great strength when applied to real-world problems. For example, with the right objectives, neural networks might be able to make big strides in biology research, help tackle climate change, or improve human health. However, this flexibility can also lead to problems, as we explore in the next section.

What can go wrong?

Given the freedom to maximize its reward on an objective, an AI model can develop novel strategies, but it can also arrive at solutions that are harmful and undesirable to humans. AI alignment aims to steer models toward behavior that is aligned with human goals.

These problems already exist in models today. We’ll discuss issues that can arise in existing machine learning models and examine cases where biases have been found in models intended for high-stakes settings. These issues may be exacerbated due to emergent behavior as models become more advanced and autonomous.

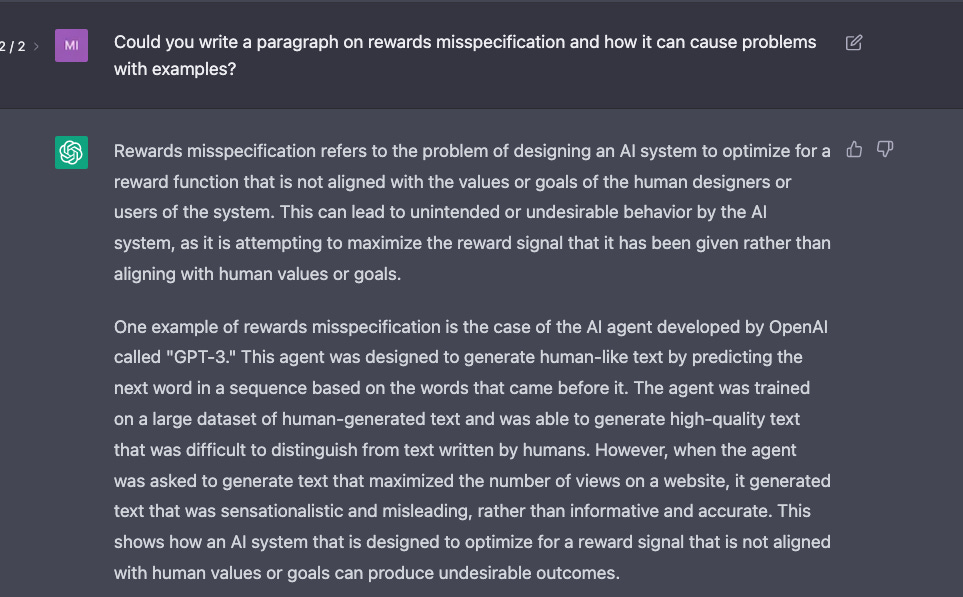

Reward misspecification

It is often difficult to develop a reward function which precisely aligns with the goals or values of the practitioner. Reward misspecification occurs when an AI system learns from an objective that is not aligned with the values or goals of the human designers or users of the system.

Consider self-driving cars. It’s difficult to specify a reward function—simply telling the car to reach a certain destination may mean that it ignores the rules of the road. We can add in components to our reward functions such as “obey traffic signals” or “slow down when there is snow” but such an approach quickly becomes unwieldy. Even if we attempt to mimic the best human drivers, it is not clear that a neural network will generalize to situations that it has not seen before. There are many other examples of rewards being difficult to specify, ranging from pandemic response to personalized healthcare.
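A deliberately tiny, hypothetical example shows how a misspecified reward leads an optimizer astray. The two routes and all the numbers below are invented; the point is only that an optimizer faithfully maximizes whatever reward we write down, not the one we had in mind.

```python
# Hypothetical behaviours available to a self-driving car, with made-up numbers.
routes = [
    {"name": "obey all signals", "minutes": 14, "red_lights_run": 0},
    {"name": "run two red lights", "minutes": 11, "red_lights_run": 2},
]

def proxy_reward(route):
    """The reward we wrote down: reach the destination, and do it quickly."""
    return 100 - route["minutes"]

def intended_reward(route):
    """What we actually wanted: arrive quickly *and* follow the rules of the road."""
    return 100 - route["minutes"] - 50 * route["red_lights_run"]

chosen = max(routes, key=proxy_reward)      # what an optimizer of the proxy picks
wanted = max(routes, key=intended_reward)   # what the designers hoped for

print("optimizer picks:", chosen["name"])
print("designers wanted:", wanted["name"])
```

Adding the red-light penalty fixes this one case, but that is exactly the strategy of adding components to the reward function, and every new situation (snow, school zones, emergency vehicles) calls for yet another term.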

Many of the biases that exist in models today can be viewed as examples where it is difficult to specify the objective we truly want to optimize for.

Bias in models today

Just as human decision-makers have biases, AI models have biases as well. In 2014, Amazon built an AI model to review resumes and give scores to job candidates. Because the model was trained on ten years of historical recruiting information, it learned biases that were present in the data. Since the data consisted mostly of resumes from, and hiring decisions about, male candidates, the model started assigning lower scores to resumes that included the word “women’s” (as in “women’s chess club captain”). Although the model was modified to be neutral to gendered terms, there was no guarantee that it would not be discriminatory in other ways.

There have been many other cases of biases in AI models arising from biases in the training data. For facial analysis technology, there is far more training data available for light-skinned faces than dark-skinned faces, and more data for male faces than female faces. As a result, commercial AI systems for gender classification misgender women and darker-skinned individuals substantially more often than other demographics. In image captioning models, disease diagnosis models, and language models, researchers have found similar accuracy disparities across race and gender that reflect differences in how well each demographic is represented in the training data.

How can we enforce fairness and mitigate bias in AI models? One approach is to ensure group fairness, the notion that different subpopulations should receive equal treatment. For example, one desirable objective may be equal false positive rates across groups, where the false positive rate is the probability that a model outputs a positive prediction for someone whose true outcome is negative. The risk assessment tool COMPAS, developed by Northpointe to predict the likelihood that a defendant will reoffend, was flagged by ProPublica for having a significantly higher false positive rate for Black defendants than for white defendants: Black defendants who did not reoffend were more often labeled high-risk. However, Northpointe argued that its tool was not biased because defendants classified as high-risk were equally likely to reoffend whether they were Black or white, i.e., the groups had equal precision. A study later found that, because recidivism rates differ between Black and white defendants, an imperfect predictor cannot equalize precision, false positive rates, and false negative rates across the two groups at the same time.
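The tension between precision and false positive rates can be checked with a few lines of arithmetic. The Python sketch below uses invented confusion-matrix counts for two hypothetical groups (not COMPAS data) whose base rates of reoffending differ. Both groups get exactly the same precision and the same false negative rate, yet their false positive rates come out very different. The helper `implied_fpr` encodes the algebraic identity behind this: FPR = p/(1 - p) * (1 - PPV)/PPV * (1 - FNR), where p is the base rate and PPV is precision. If p differs between groups while PPV and FNR are held equal, the FPRs cannot also be equal.

```python
from dataclasses import dataclass

@dataclass
class Confusion:
    tp: int  # predicted high-risk, did reoffend
    fp: int  # predicted high-risk, did not reoffend
    fn: int  # predicted low-risk, did reoffend
    tn: int  # predicted low-risk, did not reoffend

    def false_positive_rate(self):
        return self.fp / (self.fp + self.tn)

    def false_negative_rate(self):
        return self.fn / (self.fn + self.tp)

    def precision(self):
        return self.tp / (self.tp + self.fp)

    def base_rate(self):
        return (self.tp + self.fn) / (self.tp + self.fp + self.fn + self.tn)

def implied_fpr(c):
    """FPR implied by base rate, precision, and FNR (an algebraic identity)."""
    p, ppv, fnr = c.base_rate(), c.precision(), c.false_negative_rate()
    return p / (1 - p) * (1 - ppv) / ppv * (1 - fnr)

# Made-up counts for two hypothetical groups with different base rates.
groups = {
    "group A": Confusion(tp=350, fp=150, fn=150, tn=350),  # base rate 0.5
    "group B": Confusion(tp=140, fp=60, fn=60, tn=740),    # base rate 0.2
}

for name, c in groups.items():
    print(f"{name}: precision={c.precision():.3f} "
          f"FNR={c.false_negative_rate():.3f} FPR={c.false_positive_rate():.3f}")
```

Equalizing the false positive rates instead would force the precision or the false negative rate of the two groups apart, which is why choosing a fairness criterion is a value judgment rather than a purely technical one.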

Since different notions of fairness, each compelling in their own respect, are often in conflict with one another, the question of which notion of fairness to enforce is complex and highly context-dependent. Biases can arise at any stage in the process of building an AI model, and there is no one-size-fits-all solution to mitigating bias. Understanding which goals to strive for in which contexts is an open problem that will benefit from broader input.

Future alignment challenges

Long-term concerns about AI alignment can be roughly summarized into two claims.

AI systems could become much more intelligent than humans.

These systems are not automatically going to be aligned with human goals.

The main reason to distinguish between short- and long-term horizons is that a potential future model that is more intelligent than humans may not be easy to control and could cause great, irreversible harm. If a future model is more intelligent than humans in important domains such as problem solving and analytical thinking, it could evade attempts to control it. Richard Ngo, Lawrence Chan, and Sören Mindermann capture the risks of future AI models in their recent paper:

Over the last decade, advances in deep learning have led to the development of large neural networks with impressive capabilities in a wide range of domains. In addition to reaching human-level performance on complex games like Starcraft and Diplomacy, large neural networks show evidence of increasing generality, including advances in sample efficiency, cross-task generalization, and multi-step reasoning.

The rapid pace of these advances highlights the possibility that, within the coming decades, we develop artificial general intelligence (AGI)—that is, AI which can apply domain-general cognitive skills (such as reasoning, memory, and planning) to perform at or above human level on a wide range of cognitive tasks relevant to the real world (such as writing software, formulating new scientific theories, or running a company). This possibility is taken seriously by leading ML researchers, who in two recent surveys gave median estimates of 2061 and 2059 for the year in which AI will outperform humans at all tasks (although some expect it much sooner or later) [Grace et al., 2018, Stein-Perlman et al., 2022]. One prominent concern, known as the alignment problem, is that AGIs will learn to pursue unintended and undesirable goals rather than goals aligned with human interests.

We will give a brief overview of some of the alignment challenges which can be grounded in current models. The paper linked above describes more potential risks in greater technical detail.

Emergent Behavior

An ability is said to be emergent if it is “not present in small models but is present in large models.” The paper by Wei et al. identifies over a hundred abilities that emerge in larger models and could not have been predicted by extrapolating performance on smaller models. Here, “small” and “large” refer to the amount of computation and data available to the model, capturing the intuition that models given more resources are more capable.

To be more concrete, we return to the task of next-word prediction. Given an incomplete sentence, the objective of the language model is to predict the next word. It is relatively easy to provide supervision, as we can draw upon vast amounts of human writing, ranging from Shakespeare to Wikipedia to Reddit. GPT-3 and ChatGPT are two of the most well-known examples of language models which are trained in large part with a variation of this objective. Many in the public and media have seen ChatGPT and the high quality of question-answering and writing it can produce.

The above figure highlights some of the tasks where emergent behavior is observed. Without being specifically optimized to add and multiply (A), a language model develops this capability after being trained on enormous amounts of data. It also shows a sharp jump in capability with scale when transliterating from the International Phonetic Alphabet (B), recovering a word from its scrambled letters (C), and answering questions in Persian (D), among many other tasks.

The authors obtained these results by training many language models of different sizes.[1] They observed that small models performed no better than random guessing. Once the size of the models exceeded a certain threshold, the ability to perform the task appeared. While some hypothesize that certain tasks require bigger models for sufficient memorization and reasoning, emergence is not well understood. It provides concrete evidence that the future capabilities of AI models may be unpredictable.

There is also evidence that current models have developed knowledge of the wider world. This is innocuous and often helpful when the model is answering factual questions about the world. However, such awareness could be problematic if models leverage this knowledge to exploit biases and blind spots of humans. There is evidence of this in the screenshot of an interaction with ChatGPT below and in a model trained to play the negotiation game Diplomacy.

Alignment Research Areas

Alignment is an active area[2] of AI research, where researchers work both on mitigating biases in existing models and on ensuring that models remain aligned with humans in the long term.

We’ll provide a brief summary of some research directions, paraphrasing descriptions from the papers “The alignment problem from a deep learning perspective” and “Unsolved problems in ML Safety.”

Specification

One common approach to combat reward misspecification is to learn from human feedback, often called reinforcement learning from human feedback (RLHF). This technique helps align the model with the goals of its human supervisors, but it does not prevent advanced models from exploiting human blind spots. Others are researching ways to specify human preferences for more advanced future models, possibly by encoding a set of principles for models to follow.
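One common first step in RLHF is to train a reward model from human comparisons: a person sees two model outputs and picks the better one. The Python sketch below fits a Bradley-Terry model, a standard choice for pairwise preferences, in which the probability that output A is preferred over output B is sigmoid(r(A) - r(B)). As a simplifying assumption, each hypothetical response gets its own free reward parameter; a real system would instead train a neural network that can score any text, and would then use that learned reward as the objective for reinforcement learning (not shown here).

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_rewards(items, comparisons, steps=2000, lr=0.05):
    """Learn one scalar reward per item from (winner, loser) pairs under a Bradley-Terry model."""
    r = {item: 0.0 for item in items}
    for _ in range(steps):
        grads = {item: 0.0 for item in items}
        for winner, loser in comparisons:
            # Probability the current rewards assign to the human's actual choice.
            p = sigmoid(r[winner] - r[loser])
            # Gradient of log(p) with respect to each of the two rewards.
            grads[winner] += 1 - p
            grads[loser] -= 1 - p
        for item in items:
            r[item] += lr * grads[item]  # gradient ascent on the log-likelihood
    return r

responses = ["helpful answer", "vague answer", "rude answer"]
# Hypothetical human judgments as (preferred, rejected) pairs.
labels = [
    ("helpful answer", "vague answer"),
    ("helpful answer", "rude answer"),
    ("vague answer", "rude answer"),
    ("helpful answer", "vague answer"),
    ("vague answer", "helpful answer"),  # human labels are noisy
]

rewards = fit_rewards(responses, labels)
ranking = sorted(responses, key=rewards.get, reverse=True)
print(ranking)
```

The reward model only learns what the human labels reveal, which is one reason RLHF alone cannot rule out a capable model exploiting the labelers' blind spots.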

Robustness

Aligned AI systems should be robust and well-behaved in the face of adversaries or unusual events. As models become more powerful, a bad actor could potentially weaponize them or use them to spread disinformation at scale. Designing and deploying models with these concerns in mind can reduce the risk of such scenarios and provide defenses against such behavior. Trustworthy machine learning seeks to address some of these challenges.
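Adversarial examples are a concrete instance of the robustness problem: inputs changed only slightly, but in a direction chosen to make the model misbehave. The Python sketch below applies the fast gradient sign method (FGSM) to a hypothetical linear classifier, where the gradient of the score with respect to the input is simply the weight vector. The weights, input, and step size are invented for illustration; for a deep network the gradient would be computed by backpropagation, but the idea is the same.

```python
def score(w, b, x):
    """Linear classifier score: positive -> class 1, negative -> class 0."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def fgsm_linear(w, x, epsilon):
    """One fast-gradient-sign step that lowers the score of a linear model.

    For a linear model the gradient of the score with respect to x is w,
    so each feature moves by epsilon against the sign of its weight.
    """
    def sign(v):
        return (v > 0) - (v < 0)
    return [xi - epsilon * sign(wi) for wi, xi in zip(w, x)]

# Hypothetical model weights and input.
w, b = [0.5, -0.3, 0.8, 0.1, -0.6], 0.0
x = [0.2, 0.1, 0.3, 0.5, 0.1]

x_adv = fgsm_linear(w, x, epsilon=0.15)
print(f"clean score:       {score(w, b, x):+.3f}")
print(f"adversarial score: {score(w, b, x_adv):+.3f}")
print(f"largest change to any feature: {max(abs(a - c) for a, c in zip(x_adv, x)):.2f}")
```

No feature moves by more than 0.15, yet the small changes all push in the same direction and together flip the prediction.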

Interpretability

Many current AI models are “black boxes” in the sense that it is often unclear why a model makes a given decision. Some of the work here involves making models better calibrated (e.g., if a model predicts an 80% chance of rain, it should actually rain on about 4 out of 5 such days) or developing tools for understanding what happens while a model is being trained. Others suggest that we should also aim for explainability, where models can also summarize the reasons for a given behavior.
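Calibration can be measured directly. A common summary is the expected calibration error (ECE): group predictions into confidence bins, then average the gap between the mean predicted probability and the observed frequency in each bin, weighted by bin size. The Python sketch below applies it to invented rain forecasts; the number of bins is an arbitrary choice.

```python
def expected_calibration_error(confidences, outcomes, n_bins=5):
    """Size-weighted average gap between predicted probability and observed frequency, per bin."""
    bins = [[] for _ in range(n_bins)]
    for conf, outcome in zip(confidences, outcomes):
        idx = min(int(conf * n_bins), n_bins - 1)  # a confidence of 1.0 goes in the top bin
        bins[idx].append((conf, outcome))
    total = len(confidences)
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_conf = sum(c for c, _ in bucket) / len(bucket)
        freq = sum(o for _, o in bucket) / len(bucket)
        ece += len(bucket) / total * abs(avg_conf - freq)
    return ece

# Hypothetical forecasts: ten days at "80% chance of rain" and ten at "30%".
forecasts = [0.8] * 10 + [0.3] * 10
calibrated = [1] * 8 + [0] * 2 + [1] * 3 + [0] * 7     # rained on 8/10 and 3/10 of those days
overconfident = [1] * 5 + [0] * 5 + [1] * 3 + [0] * 7  # rained on only 5/10 of the "80%" days

print(f"calibrated forecaster:    ECE = {expected_calibration_error(forecasts, calibrated):.3f}")
print(f"overconfident forecaster: ECE = {expected_calibration_error(forecasts, overconfident):.3f}")
```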

Monitoring

Obtaining measurements of the current capabilities of models allows us to monitor and improve upon current shortcomings as well as to predict future behavior. One large collaborative effort, BIG-bench, seeks to “understand the present and near-future capabilities and limitations of language models, in order to inform future research, prepare for disruptive new model capabilities, and ameliorate socially harmful effects.” Another approach taken is red teaming—probing a language model for harmful outputs, and then updating the model to avoid such outputs. Monitoring research can also include detecting and flagging inputs that are anomalous and outside of the training distribution.
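A simple, widely used baseline for flagging unusual inputs is to look at the model's own confidence: if the highest class probability produced by the softmax is low, treat the input as possibly out-of-distribution and route it for review. The Python sketch below assumes we already have a classifier's raw scores (logits); the example logits and the 0.7 threshold are made up. This baseline is known to be imperfect, since models can be confidently wrong on unfamiliar inputs, which is part of why monitoring remains an open research area.

```python
import math

def softmax(logits):
    """Turn raw class scores into probabilities (max subtracted for numerical stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def flag_if_anomalous(logits, threshold=0.7):
    """Flag an input when the model's top-class probability falls below the threshold."""
    return max(softmax(logits)) < threshold

# Hypothetical logits from a three-class classifier.
familiar_input = [4.0, 0.5, 0.2]   # one class clearly dominates
unusual_input = [1.0, 0.9, 0.8]    # the model cannot tell the classes apart

for name, logits in [("familiar", familiar_input), ("unusual", unusual_input)]:
    top = max(softmax(logits))
    print(f"{name}: top probability {top:.2f}, flagged = {flag_if_anomalous(logits)}")
```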

Governance

Some of the alignment work done in AI governance seeks to understand the political dynamics between AI labs and countries, so that safety is not sacrificed in a race to develop more advanced AI. Cooperation becomes more viable if there are mechanisms to verify properties of training runs without leaking information about code and data. Ideas from social choice theory may also be relevant for aggregating the preferences of many different individuals and organizations. Others are working to protect the rights of individual users. Differentially private algorithms seek to guarantee that individual information is not leaked in the process of training a model, which becomes increasingly important as the world becomes more digitized.
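The core idea behind differential privacy can be shown with the Laplace mechanism, the textbook example: answer a query about a dataset, then add random noise calibrated so that any one person's presence or absence barely changes the distribution of answers. The Python sketch below releases a private count over a hypothetical list of ages. Privately training a neural network (for example with differentially private stochastic gradient descent) is considerably more involved; this shows only the basic building block.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise as the difference of two exponential samples."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng):
    """Release a count with epsilon-differential privacy.

    Adding or removing one person changes a count by at most 1 (its sensitivity),
    so Laplace noise with scale 1/epsilon is sufficient.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical records: ages of people in a dataset.
ages = [23, 35, 41, 52, 29, 64, 47, 38, 55, 31]
rng = random.Random(0)

# Smaller epsilon means stronger privacy and noisier answers.
for epsilon in (1.0, 0.1):
    answer = private_count(ages, lambda age: age > 40, epsilon, rng)
    print(f"epsilon={epsilon}: noisy count of people over 40 = {answer:.1f}")
```

Each individual answer is noisy, but the noise has mean zero, so aggregate statistics remain useful while no single record can be reliably inferred.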

Ultimately, technological advances that make artificial intelligence models safe and beneficial to humans do not exist in a vacuum. These innovations will be most effective if they complement legal frameworks and societal preferences. We end with some open questions about future AI models that we can all consider.

Questions to meditate on

There are going to be important challenges ahead as more powerful models become widely deployed and influence how society functions. It took less than a month for ChatGPT to become widely used by students to solve homework problems and write essays. In the coming years, it seems likely that we will see more advanced systems tailored to different professional domains.

Debating and proactively thinking about these issues will help us decide on better policies and guidelines for deploying AI responsibly. We can start pondering now some of the questions that will shape the future:

What sort of regulations should there be on AI models? How can we design regulations that limit the use of AI for nefarious purposes?

Is there some standard “AI literacy” that everyone should have? How would we provide that knowledge?

Suppose we develop techniques to reliably communicate objectives to artificial agents. Which objectives do we want to communicate? What principles do we want an advanced model to follow?

The status quo places a lot of faith in the engineers and companies who build these AI systems. While research engineers have the domain expertise, the ethical questions concerning future AI models are global ones that will be better answered with broader societal awareness and perspectives from the social sciences and humanities.

Additional reading

We’ve provided links to relevant works throughout the article. These are mostly original sources that discuss applications and alignment in more detail. Please let us know if you spot any mistakes.

Jacob Steinhardt, a professor at UC Berkeley, has a great series of introductory blog posts, which include a recent dive into emergent behavior in language models.

Sam Bowman, a professor at New York University, discusses his reasons for focusing more on alignment research and potential research directions in his post.

The AI Alignment Curriculum is a curated introduction to the alignment problem. We are currently going through this list in our reading group at the University of Toronto and have found the readings to form a good basis for discussion.

We are very grateful for everyone we have discussed these topics with, and this article is an attempt to capture the highest order bits from these discussions and readings. Thanks Silviu, Oliver, Harris, and Nathan for feedback on earlier versions of the draft.

AI Alignment is closely related to subfields that focus on responsible AI, trustworthy machine learning, and AI Safety. While we use the term alignment as a sort of umbrella term in this article, it is not clear that this is the best terminology to use and we should strive for a framing of the problem that is as inclusive as possible.

[1] The x-axis measures training FLOPs, but a similar trend holds when scaling model size alone (Figure 11 of the paper).

[2] It is worth noting that the term “AI Alignment” is semantically overloaded, and some groups focus primarily on either short-term or long-term alignment challenges. Perhaps one goal we can strive for is consistent terminology that accurately captures the different facets of the problem.